How Can Batching Requests Actually Reduce Latency?

WEDNESDAY, DECEMBER 4, 2013 AT 8:56AM

Jeremy Edberg gave a talk, Scaling Reddit from 1 Million to 1 Billion–Pitfalls and Lessons, and one of the issues Reddit ran into was that they:

Did not account for increased latency after moving to EC2. In the datacenter they had submillisecond access between machines, so it was possible to make 1000 calls to memcache for one page load. Not so on EC2. Memcache access times increased 10x to a millisecond, which made their old approach unusable. The fix was to batch calls to memcache so that a large number of gets go out in one request.
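To make the fix concrete, here is a minimal sketch of what batching gets looks like from the caller's side. It is not Reddit's code: `CountingCache`, `load_page_unbatched`, `load_page_batched`, the key names, and the batch size of 500 are all hypothetical, and the cache is an in-memory stand-in that only counts round trips instead of talking to a real memcached server.

```python
class CountingCache:
    """Hypothetical in-memory stand-in for a memcached client.

    It does no networking; it only counts how many round trips
    a real client would have made.
    """

    def __init__(self, data):
        self._data = dict(data)
        self.round_trips = 0

    def get(self, key):
        # One key per request: one round trip per call.
        self.round_trips += 1
        return self._data.get(key)

    def get_multi(self, keys):
        # Many keys in one request: still a single round trip.
        self.round_trips += 1
        return {k: self._data[k] for k in keys if k in self._data}


def load_page_unbatched(cache, keys):
    """Fetch every key with its own request."""
    return {k: cache.get(k) for k in keys}


def load_page_batched(cache, keys, batch_size=500):
    """Fetch keys in chunks of batch_size (an assumed value)."""
    result = {}
    for start in range(0, len(keys), batch_size):
        result.update(cache.get_multi(keys[start:start + batch_size]))
    return result


keys = [f"item:{i}" for i in range(1000)]
data = {k: i for i, k in enumerate(keys)}

unbatched = CountingCache(data)
unbatched_result = load_page_unbatched(unbatched, keys)

batched = CountingCache(data)
batched_result = load_page_batched(batched, keys)

print(unbatched.round_trips, batched.round_trips)
```

Both loaders return the same values; the only difference is how many times the page load has to wait on the network.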

Dave Pacheco had an interesting question about batching requests and its impact on latency:

I was confused about the memcached problem after moving to the cloud. I understand why network latency may have gone from submillisecond to milliseconds, but how could you improve latency by batching requests? Shouldn't that improve efficiency, not latency, at the possible expense of latency (since some requests will wait on the client as they get batched)?

Jeremy cleared it up by saying:

The latency didn't get better, but what happened is that instead of having to make a lot of calls to memcache it was just one (well, just a few), so while that one took longer, the total time was much less.
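A rough back-of-the-envelope model shows why the total drops even though each call gets slower. The numbers are illustrative assumptions, not measurements from the talk: 0.1 ms stands in for "submillisecond", 1 ms is the EC2 figure quoted above, and "just a few" batched calls is taken to be 3 calls of 2 ms each. The model also assumes the calls are issued one after another.

```python
def total_time_ms(num_calls, round_trip_ms):
    """Total wait for calls issued sequentially, one round trip each."""
    return num_calls * round_trip_ms


# Assumed figures for illustration only.
datacenter_unbatched = total_time_ms(1000, 0.1)  # 1000 fast calls
ec2_unbatched = total_time_ms(1000, 1.0)         # same calls, 10x slower each
ec2_batched = total_time_ms(3, 2.0)              # a few larger, slower calls

print(f"datacenter, unbatched: {datacenter_unbatched:.0f} ms")
print(f"EC2, unbatched:        {ec2_unbatched:.0f} ms")
print(f"EC2, batched:          {ec2_batched:.0f} ms")
```

Under these assumptions, per-call latency on EC2 is worse in both cases, but the page pays for it 3 times instead of 1000 times.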

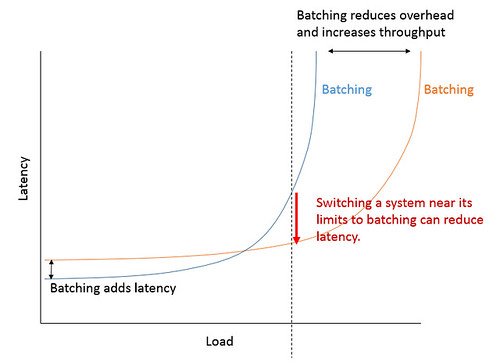

But Dave Rosenthal created a great graphic showing how batching can in fact decrease total system latency:
